Rizwana Shahid1,*, Shazia Zeb2, Uzma Hayat3, Sumaira Yasmeen4, Muhammad Ali Khalid5, Prof. Muhammad Umar6

1Assistant Professor of Community Medicine, Rawalpindi Medical University, Rawalpindi

2Medical Superintendent, Holy Family Hospital Rawalpindi, Rawalpindi

3Senior Demonstrator, Community Medicine, Rawalpindi Medical University, Rawalpindi

4Demonstrator, Department of Medical Education, Rawalpindi Medical University, Rawalpindi

5FCPS Part-II Trainee, Department of Ophthalmology, Benazir Bhutto Hospital, Rawalpindi

6Vice Chancellor, Rawalpindi Medical University, Rawalpindi

*Corresponding Author: Rizwana Shahid, Assistant Professor of Community Medicine, Rawalpindi Medical University, Rawalpindi, Pakistan.

Abstract

Background: One way to improve the standard of medical education is to assess the quality of the Multiple-Choice Questions (MCQs) used in undergraduate assessments.

Objectives: To determine the difficulty index, discrimination index, distractor effectiveness, and reliability of Multiple-Choice Questions (MCQs) of Pathology attempted by 4th-year MBBS students.

Materials & Methods: A cross-sectional descriptive study was conducted to analyze a Pathology MCQ-based test attempted online by 4th-year MBBS students at Rawalpindi Medical University in January 2021 during the COVID-19 pandemic. The papers of 112 high performers and 112 low performers were included through purposive sampling. Data were analyzed using SPSS version 25.0 and Microsoft Excel 2007. The difficulty index, discrimination index, distractor effectiveness, and reliability coefficient of the MCQs were computed. An independent-sample t-test was used to determine the difference between the difficulty index and the discrimination index. A P-value < 0.05 was considered significant.

Results: 60 % of the MCQs were easy and 40 % were moderately difficult; none was very difficult. The mean difficulty index and discrimination index were 75.67 ± 12.9 and 0.27 ± 0.14, respectively. Of the 30 MCQs identified as easy, about 50 % were poor discriminators, while 50 % of the moderately difficult items were excellent discriminators. About 87.5 % of distractors were functional. The items had perfect reliability, with a KR-20 of 1.0. An independent-sample t-test showed a statistically significant difference (P < 0.001) between the difficulty index and the discrimination index.

Conclusion: The MCQs were of acceptable quality. Critical appraisal of item analysis results may substantially enhance the standard of our questions.

Keywords: Item analysis, multiple-choice questions, difficulty index, discrimination index, reliability-coefficient.

Introduction

Multiple-Choice Questions (MCQs) are valued worldwide as a versatile tool for assessment in medical education because they can assess large numbers of students on varied topics in a short span of time [1]. Well-structured MCQs can adequately assess the higher-order thinking skills of candidates [2]. They are also among the most widely used instruments for assessing learning outcomes [3].

A valid, reliable, and objective assessment tool is imperative to adequately reflect diverse achievement levels [4]. MCQs are frequently incorporated in both undergraduate and postgraduate examinations of medical students for thorough assessment [5]. They can appraise higher-order cognitive domains rather than merely judging memorized knowledge [6]. Moreover, they are effective in differentiating high achievers from low achievers [7].

Item analysis of MCQs helps determine the quality of questions in terms of difficulty index, discrimination index, and distractor effectiveness. Psychometric analysis can also reveal a lack of internal consistency in a test and identify items that should be excluded [8]. Its prime objective is either to revise a multiple-choice question or to replace it with one of adequate difficulty level and discrimination index, so that candidates’ knowledge and competencies can be assessed accurately [9]. Incorporating non-standardized options in MCQs not only hampers students' recall of knowledge but also leads to guessing [10]. Good-quality MCQs, apart from promoting problem-solving and critical thinking skills among students, also reflect the considerable effort teachers put into designing them [11]. Amending MCQs in accordance with their item analysis is a productive way to improve them [12].

The present study was therefore planned to scrutinize the key attributes of the multiple-choice questions in the Pathology send-up exam administered to 4th-year MBBS students at Rawalpindi Medical University during January 2021. The resulting suggestions should help the concerned stakeholders raise the standard of questions.

Materials & methods

A cross-sectional descriptive study was carried out by analyzing Pathology send-up papers attempted online by a total of 336 4th-year MBBS students at Rawalpindi Medical University during January 2021 amid the COVID-19 pandemic. Each MCQ had a scenario-based stem with 5 options. MCQs were reviewed by subject specialists before incorporation in the MCQ-based paper, which was prepared on Google Forms. A total of 50 MCQs were attempted by students in one hour (60 minutes). Question papers were scored automatically in Google Forms without any negative marking. Validity of this assessment was assured by deputing 16 teachers at a time, each supervising an individual batch of about 20 students. Each teacher created an MS Teams online link and sent it to the 4th-year coordinator for onward dissemination to the students’ CR and GR. Students joined the assessment through that link, and their respective supervisors monitored them during the one-hour exam with cameras and microphones switched on. The Institutional Review Board (IRB) of RMU approved this research proposal. The scores of the students were arranged from highest to lowest, and the upper 1/3 (112 high-performing students) and lower 1/3 (112 low-performing students) were included in this study through purposive sampling in order to analyze their MCQ papers thoroughly. Data analysis was done using SPSS version 25.0 and Microsoft Excel 2007. Post-exam validation of MCQs was established by calculating the difficulty index, discrimination index, distractor effectiveness, and reliability coefficient. These indices, along with their interpretation, are described below:

Difficulty Index

Difficulty Index reflects the proportion of the students who attempted multiple-choice questions correctly. Its formula is:

$$\text{Difficulty Index} = \frac{\text{No. of students who attempted MCQ item correctly}}{\text{Total number of students assessed}} \times 100$$

- ≤ 25: Very difficult

- 25-75: Moderately difficult

- ≥ 75: Very easy [13]
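The difficulty index and its interpretation can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the example counts are hypothetical, and because the cut-offs quoted above overlap at exactly 25 and 75, the sketch assigns those boundary values to the middle band, as Table 1 does.

```python
def difficulty_index(num_correct: int, num_assessed: int) -> float:
    """Return the percentage of assessed students who answered the item correctly."""
    if num_assessed <= 0:
        raise ValueError("num_assessed must be a positive number of students")
    return 100.0 * num_correct / num_assessed


def classify_difficulty(index: float) -> str:
    """Map a difficulty index (0-100) to a category.

    Assumption: values of exactly 25 or 75 fall in the middle band.
    """
    if index < 25:
        return "very difficult"
    if index <= 75:
        return "moderately difficult"
    return "very easy"


# Hypothetical item: 190 of the 224 analysed students answered correctly.
example_index = difficulty_index(190, 224)
example_label = classify_difficulty(example_index)
print(f"{example_index:.1f} -> {example_label}")
```

Keeping the calculation and the classification in separate functions makes it easy to change the cut-offs without touching the formula.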

Discrimination Index

The ability of MCQs to discriminate high achievers from low achievers is determined by this formula [14]:

$$\text{Discrimination Index} = \frac{2\,(UG - LG)}{N}$$

Where

- UG – No. of students in the upper group who answered the item correctly

- LG – No. of students in the lower group who answered the item correctly

- N – Total number of students assessed

Interpretation of the discrimination index is as follows:

i. < 0.20: Poor

ii. 0.20 – 0.34: Good

iii. > 0.35: Excellent [14]
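The grouping into upper and lower thirds and the discrimination index formula can be sketched as follows. The function names, the dummy scores, and the example counts are hypothetical. The quoted bands leave values between 0.34 and 0.35 unassigned, so the sketch assumes that "good" covers 0.20 up to (but not including) 0.35 and "excellent" starts at 0.35.

```python
def split_upper_lower_thirds(scores):
    """Rank scores from highest to lowest and return the top and bottom thirds."""
    ranked = sorted(scores, reverse=True)
    k = len(ranked) // 3
    if k == 0:
        raise ValueError("need at least three scores to form thirds")
    return ranked[:k], ranked[-k:]


def discrimination_index(upper_correct: int, lower_correct: int, total_assessed: int) -> float:
    """Discrimination Index = 2 (UG - LG) / N."""
    if total_assessed <= 0:
        raise ValueError("total_assessed must be positive")
    return 2.0 * (upper_correct - lower_correct) / total_assessed


def classify_discrimination(di: float) -> str:
    """Assumed bands: < 0.20 poor, 0.20 to < 0.35 good, >= 0.35 excellent."""
    if di < 0.20:
        return "poor"
    if di < 0.35:
        return "good"
    return "excellent"


# Dummy scores for 336 students, used only to show the group sizes.
upper, lower = split_upper_lower_thirds(list(range(336)))

# Hypothetical item: 100 upper-group and 72 lower-group students answered correctly.
example_di = discrimination_index(100, 72, len(upper) + len(lower))
print(len(upper), len(lower), round(example_di, 2), classify_discrimination(example_di))
```

A negative result means more low performers than high performers answered the item correctly, which the sketch reports as "poor".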

Distractor Effectiveness: Distractors are incorrect options of MCQs that can distract a student. They are considered effective if chosen by students for a particular MCQ. Its formula is:

No. of high performers selecting an option – No. of low performers selecting an option

Distractor effectiveness is marked as 100 %, 66.6 %, 33.3 %, and 0 % depending on the presence of 0, 1, 2, and 3 or more non-functional distractors, respectively [15].
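Counting non-functional distractors for one item and mapping the count to a distractor effectiveness value might look like the sketch below. The text above does not state a numeric cut-off for a functional distractor, so the 5 % selection threshold used here is an assumption borrowed from common practice and exposed as a parameter; the function name and the response data are hypothetical.

```python
from collections import Counter

# Distractor effectiveness (%) by number of non-functional distractors (NFDs);
# three or more NFDs map to 0 %.
DE_BY_NFD = {0: 100.0, 1: 66.6, 2: 33.3}


def distractor_effectiveness(responses, key, options="ABCDE", min_share=0.05):
    """Return (number of NFDs, distractor effectiveness in %) for one item.

    Assumption: a distractor is non-functional when fewer than `min_share`
    of the students selected it.
    """
    total = len(responses)
    if total == 0:
        raise ValueError("responses must not be empty")
    counts = Counter(responses)
    distractors = [option for option in options if option != key]
    nfd = sum(1 for option in distractors if counts[option] / total < min_share)
    return nfd, DE_BY_NFD.get(nfd, 0.0)


# Hypothetical item with key "A": option D is rarely chosen and E is never chosen.
example_responses = ["A"] * 70 + ["B"] * 20 + ["C"] * 8 + ["D"] * 2
example_result = distractor_effectiveness(example_responses, key="A")
print(example_result)
```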

Reliability was expressed as the Kuder-Richardson (KR-20) coefficient, which ranges from 0.00 to 1.00. KR-20 > 0.90 confirms homogeneity of items [16]. The mean and median of the scores achieved were also calculated. The difference between the difficulty index and the discrimination index was computed by means of an independent-sample t-test. A P-value < 0.05 was taken as significant.
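For readers who want to reproduce a KR-20 value outside SPSS, a minimal sketch of the standard Kuder-Richardson formula 20 is given below. It assumes dichotomously scored items (1 = correct, 0 = incorrect) and uses the population variance of total scores; some software uses the sample variance instead, which changes the result slightly. The function name and the tiny data set are hypothetical.

```python
from statistics import pvariance


def kr20(score_matrix):
    """KR-20 for a list of per-student lists of 0/1 item scores.

    KR-20 = k / (k - 1) * (1 - sum(p_i * q_i) / variance of total scores)
    """
    n_students = len(score_matrix)
    k = len(score_matrix[0])
    if k < 2:
        raise ValueError("KR-20 needs at least two items")
    totals = [sum(row) for row in score_matrix]
    total_variance = pvariance(totals)  # assumption: population variance
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    pq_sum = 0.0
    for item in range(k):
        p = sum(row[item] for row in score_matrix) / n_students  # proportion correct
        pq_sum += p * (1 - p)
    return (k / (k - 1)) * (1 - pq_sum / total_variance)


# Hypothetical responses of four students to three items.
example_scores = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
example_kr20 = kr20(example_scores)
print(round(example_kr20, 2))
```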

Results

Of the 50 MCQs assessed in our study, 60 % were very easy and 40 % were moderately difficult (Table 1). In addition, 62 % of items had good to excellent discriminating ability (Table 2).

Table 1: Difficulty index of MCQ items (n = 50)

| Difficulty index | Interpretation | No. of items (%) |
|---|---|---|
| < 25 | Very difficult | 0 |
| 25-75 | Moderately difficult | 20 (40%) |
| > 75 | Easy | 30 (60%) |
| Difficulty Index (mean ± SD) | 75.67 ± 12.9 | |

Table 2: Discrimination index of MCQ items (n = 50)

| Discrimination Index | Interpretation | No. of items (%) |
|---|---|---|
| < 0.20 | Poor | 19 (38%) |
| 0.20 – 0.34 | Good | 17 (34%) |
| > 0.35 | Excellent | 14 (28%) |
| Discrimination Index (mean ± SD) | 0.27 ± 0.14 | |

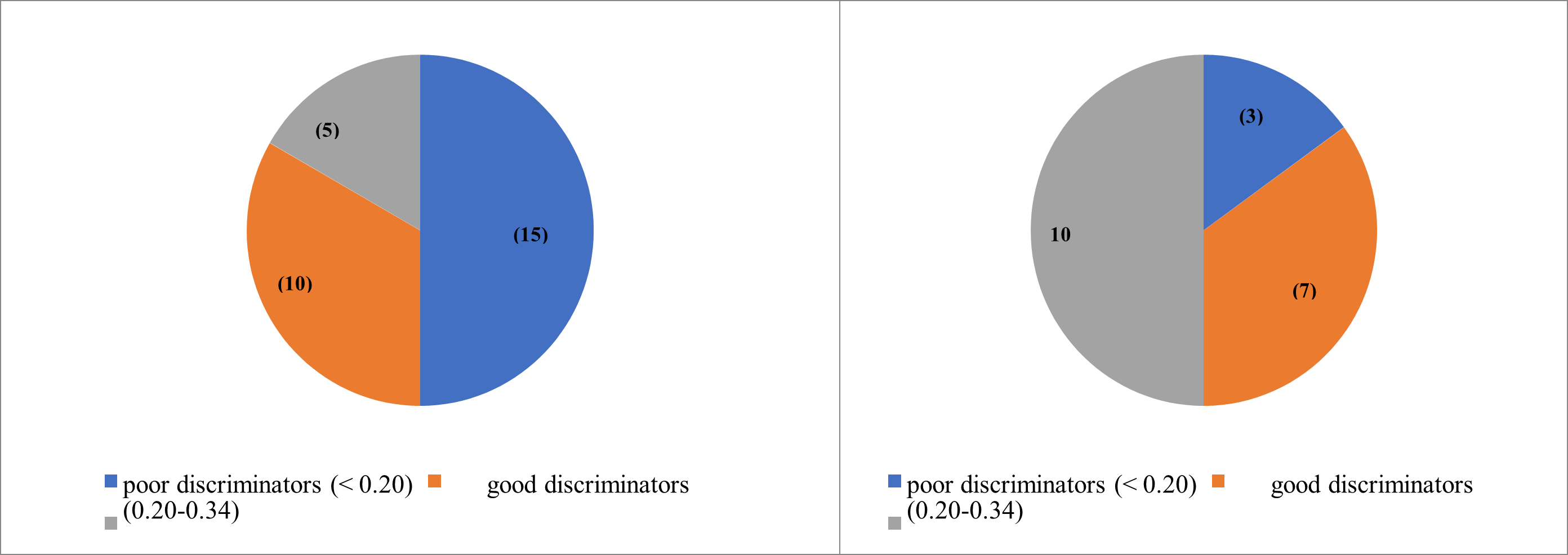

The difference between the difficulty index and the discrimination index was highly significant (t-test statistic = 41.37, P < 0.001) on application of an independent-sample t-test. Although most MCQs were very easy, they still discriminated adequately between high and low performers. Of the 30 (60 %) easy MCQs, half had good to excellent discriminating power, while 50 % of the 20 (40 %) moderately difficult questions had excellent discriminating ability, as shown in Figure 1.

Figure 1: Discriminating ability of MCQs in relation to their difficulty level. Panels: Easy MCQs (n = 30); Moderately Difficult MCQs (n = 20).
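As a rough plausibility check, the reported t statistic can be approximated from the summary statistics in Tables 1 and 2. This sketch assumes that the test compared the 50 per-item difficulty values (on the 0-100 scale) with the 50 per-item discrimination values, which the text does not state explicitly:

$$t \approx \frac{75.67 - 0.27}{\sqrt{\dfrac{12.9^2}{50} + \dfrac{0.14^2}{50}}} = \frac{75.40}{\sqrt{3.3282 + 0.0004}} \approx \frac{75.40}{1.824} \approx 41.3$$

This is close to the reported value of 41.37; a gap of this size would be expected from rounding in the published means and standard deviations.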

The MCQ paper was determined to have excellent reliability (KR-20 = 1.0). Thirty-nine MCQs (78 %) had 100 % distractor effectiveness. Out of a total of 150 marks for the Pathology MCQ paper, the mean score achieved by our students was 114.15 ± 33.00, as shown in Table 3.

Table 3: Distractors and other scores pertinent to MCQ items (n = 50)

| Parameter | Value |
|---|---|
| Total Distractors | 200 |
| Functional Distractors | 175 (87.5 %) |
| Non-Functional Distractors | 25 (12.5 %) |
| Items with 0 *NFD (**DE = 100 %) | 39 (78 %) |
| Items with 1 NFD (DE = 66.6 %) | 3 (6 %) |
| Items with 2 NFDs (DE = 33.3 %) | 5 (10 %) |
| Items with 3 or more NFDs (DE = 0 %) | 3 (6 %) |
| Distractor Efficiency (Mean ± SD) | 85.33 ± 30.24 |
| Mean Score achieved by our students | 114.15 ± 33.00 |
| Mean Score of High achievers | 135.56 ± 6.9 |
| Mean Score of Low achievers | 92.73 ± 34.9 |
| Median Score | 126 |
| KR-20 | 1.0 |

*NFD – Non-Functional Distractor

**DE- Distractor Effectiveness
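The mean distractor efficiency in Table 3 can be cross-checked from the item counts in the same table, taking the DE value attached to each category:

$$\overline{DE} = \frac{39(100) + 3(66.6) + 5(33.3) + 3(0)}{50} = \frac{4266.3}{50} \approx 85.33$$

This agrees with the reported mean of 85.33. Likewise, 50 items with 4 distractors each (5 options minus the key) give the 200 distractors listed, which matches the sum of functional and non-functional distractors, 175 + 25 = 200.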

Discussion

Post-examination analysis of Multiple-Choice Questions (MCQs) is an imperative academic exercise. Apart from assessing the quality of individual questions, it also appraises the overall standard of question papers [17]. The resulting recommendations can guide teachers in raising the caliber of MCQs. Of the 50 MCQs included in the Pathology send-up paper in the current study, 30 (60 %) were very easy and 20 (40 %) were moderately difficult. Not a single MCQ was labeled as very difficult. The mean difficulty index of our items was 75.67 ± 12.9.

Similar research carried out at Rawalpindi Medical University during 2017, which scrutinized the Pharmacology MCQ paper of 4th-year MBBS students, revealed that 80 % of questions were very easy and only 13.3 % were moderately difficult, with a mean difficulty index of 81.64 ± 24.93 [18]. An MCQ evaluation by the faculty of a Saudi medical university indicated a mean difficulty index of 79.1 ± 3.3 [8]. The mean difficulty index of the MCQs analyzed in the current research is moderate by comparison: it is not as high as that found in the 2017 review of the RMU Pharmacology paper, nor as high as that reported by the item analysis at the Saudi university. Another study by Patel KA et al. showed that 80 % of MCQs were moderately difficult, while the remaining 20 % needed revision as they were either very easy or very difficult [19]. One reason why 60 % of the MCQs in our Pathology paper were very easy might be the disruption in studies during 2020 in the wake of the COVID-19 pandemic. Not a single item was very difficult for our students; this could most probably be attributed to the COVID-19 pandemic, which markedly disrupted face-to-face education, and the non-inclusion of very difficult items suggests our teachers' intention to help students get through their exams. However, sharing these item analysis results along with recommendations can help our teachers to a great extent in improving the standard of questions.

About 34 % and 28 % of MCQs in the present study had good and excellent ability, respectively, to discriminate high achievers from low achievers. The remaining 38 % had poor discriminating ability. The mean discrimination index was 0.27 ± 0.14, and only 2 questions (Q No. 13 and 23) had a negative discrimination index. Similarly, research carried out to analyze MCQs of Community Medicine revealed that 2 items had a negative discrimination index [20]. MCQs with a negative discrimination index can diminish the validity of the exam and should preferably be eliminated from the paper [21]. Similar research on item analysis of 40 MCQs found that 42.5 % and 17.5 % of questions were excellent and good discriminators, respectively [19]. Likewise, 65 MCQs incorporated in the Pharmacology term exam of a private medical college in Pakistan had a mean discrimination index of 0.33 ± 0.21, which is higher than in our study. The discrimination index carries more weight when the test also shows high reliability in terms of homogeneity of the items [22]. As the MCQ items in the current study were also found to be homogeneous, as indicated by the KR-20 (1.0), the discriminating ability of the items is the aspect that deserves particular attention in improving the standard of the questions.

Of the 200 distractors analyzed in our study, 175 (87.5 %) were functional. Constructing adequate distractors in multiple-choice questions is imperative. Thirty-nine MCQs had 100 % distractor effectiveness, and only 3 MCQs had three or more non-functional distractors. Research by Ingale AS et al. in 2017 to assess the quality of 30 MCQs showed that 8 MCQs had all functional distractors, while distractors in only 2 items were non-functional [23]. The construction of appropriate distractors in multiple-choice questions should be prioritized in our medical examinations, as they help differentiate between high performers and low performers [24].

The reliability coefficient (KR-20) in our research is 1.0, reflecting perfect internal consistency among all the items of the Pathology MCQ paper. A study analyzing 21 MCQ-based tests at a medical university in Saudi Arabia found a wide range of KR-20 values (0.47-0.97). One of the reasons for the low KR-20 in some of the tests was the small number of items in a test [25]. However, this aspect could be better examined by analyzing more tests at one time. The present study shows that about 50 % of the easy MCQs attempted by our students had poor ability to discriminate high achievers from low achievers, whereas 50 % of the moderately difficult questions were excellent discriminators (Figure 1). Likewise, in research by Rao C et al. on item analysis of Pathology MCQs among 2nd-year MBBS students, the difficulty level was positively correlated with the discrimination index [26]. Studying the association of difficulty level with discriminatory ability may also help our teachers improve the strength of our MCQs and hence elevate the standard of assessment.

Conclusion & recommendations

Item analysis of multiple-choice questions is a constructive approach that provides insight into their quality, which is essential for accurately assessing students’ knowledge. Ample time should be spent on capacity building and on designing standard MCQs. Well-structured MCQs with apt functional distractors are needed to ensure objectivity and reliability of assessment.

References

1. Poornima S, Vinay M (2012) The science of constructing good Multiple-Choice Questions (MCQs). RGUHS J Med Sciences. 2(3): 141-145.

2. Javaeed A (2018) Assessment of higher ordered thinking in medical education: Multiple Choice Questions and Modified Essay Questions. MedEdPublish.

3. Velou MS, Ahila E (2020) Refine the multiple-choice questions tool with item analysis. IAIM. 7(8): 80-85.

4. Kheyami D, Jaradat A, Al-Shibani T, Ali FA (2018) Item Analysis of Multiple-Choice Questions at the department of Paediatrics, Arabian Gulf University, Manama, Bahrain. Clinical and Basic Research. 18(1): e68-e74.

5. Afraa M, Samir S, Ammar A (2018) Distractor analysis of multiple‑choice questions: A descriptive study of physiology examinations at the Faculty of Medicine, University of Khartoum. Khartoum Med J. 11: 1444‑53.

6. McAllister D, Guidice RM (2012) Advantages and Disadvantages of Different Types of Test Questions. Teaching in Higher Education. 17(2): 193-207.

7. Christian DS, Prajapati AC, Rana BM, Dave VR (2017) Evaluation of multiple-choice questions using item analysis tool: a study from a medical institute of Ahmedabad, Gujarat. Int J Community Med Public Health. 4(6): 1876-81.

8. Salih KEMA, Jibo A, Ishaq M, Khan S, Mohammed OA, et al. (2020) Psychometric analysis of multiple-choice questions in an innovative curriculum in Kingdom of Saudi Arabia. J Family Med Prim Care. 9(7): 3663-3668.

9. Halikar SS, Godbole V, Chaudhari S (2016) Item Analysis to assess quality of MCQs. Indian Journal of Applied Research. 6(3): 123-125.

10. Haladyna T (2013) Selected-response format: developing multiple-choice items. 1st ed. Chapter 5. New York, NY: Routledge. p. 79-131.

11. Tavakol M, Dennick R (2011) Post-examination analysis of objective tests. Medical Teacher. 33(6): 447-58.

12. Tarrant M, Ware J, Mohammed AM (2009) An assessment of functioning and non-functioning distractors in multiple-choice questions: a descriptive analysis. BMC Medical Education. 9(1): 40.

13. Mehta G, Mokhasi V (2014) Item analysis of multiple-choice questions – An assessment of the assessment tool. Int J Health Sci Res. 4(7): 197-202.

14. Kaur M, Singla S, Mahajan R (2016) Item analysis of in use multiple choice questions in Pharmacology. Int J Appl Basic Med Res. 6(3): 170–173.

15. Chauhan PR, Ratrhod SP, Chauhan BR, Chauhan GR, Adhvaryu A, et al. (2013) Study of Difficulty Level and Discriminating Index of Stem Type Multiple Choice Questions of Anatomy in Rajkot. Biomirror. 4(6): 1-4.

16. Kuder-Richardson Formulas.

17. Singh T, Gupta P, Singh D (2019) Test and Item analysis. In: Principles of Medical Education. 3rd ed. New Delhi: Jaypee Brothers Medical Publishers (P) Ltd. p. 70-77.

18. Shahid R, Farooq Q, Iqbal R (2019) Item analysis of multiple-choice questions of Ophthalmology at Rawalpindi Medical University Rawalpindi, Pakistan. RMJ. 44(1): 192-195.

19. Patel KA, Mahajan NR (2013) Itemized Analysis of Questions of Multiple-Choice Question (MCQ) Exam. International Journal of Scientific Research. 2(2): 279-280.

20. Chhaya J, Bhabhor H, Devalia J, Machhar U, Kavishvar A (2018) A study on quality check of Multiple-Choice Questions (MCQs) using item analysis for differentiating good and poor performing students. Healthline Journal. 9(1): 24-29.

21. Patel RM (2017) Use of Item analysis to improve quality of Multiple-Choice Questions in II MBBS. Journal of Education Technology in Health Sciences. 4(1): 22-9.

22. Chiavaroli N, Familari M (2011) When majority does not rule: The use of discrimination indices to improve quality of MCQs. Bioscience Education. 17(1): 1-7.

23. Ingale AS, Giri PA, Doibale MK (2017) Study on item and test analysis of multiple-choice questions amongst undergraduate medical students. International Journal of Community Medicine and Public Health. 4(5): 1562-5.

24. Velou MS, Ahila E (2020) Refine the multiple-choice questions tools with item analysis. IAIM. 7(8): 80-85.

25. Elfaki OA, Alamri A, Salih KA (2020) Assessment of MCQs in MBBS program in Saudi Arabia. Oncology and Radiotherapy. 54(1): 1-7.

26. Rao C, Kishan Prasad HL, Sajitha K, Premi H, Shetty J (2016) Item analysis of multiple-choice questions: Assessing an assessment tool in medical students. Int J Educ Psychol Res. 2(4): 201-204.